Published in: 2023 8th International Conference on Intelligent Computing and Signal Processing (ICSP)

Authors: Chao Zhang, Jianmei Cheng

Topic: Intelligent sensing, state discrimination, and response for emergency events

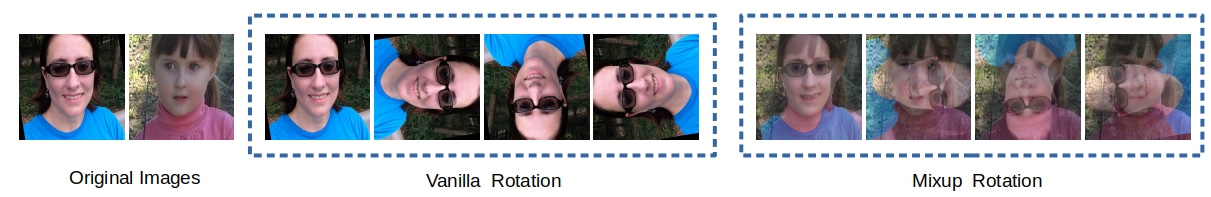

Abstract: Ordinal classification is an important research topic that assigns each instance an ordinal category. In practical applications, it relies heavily on supervised deep models, which require massive amounts of labeled samples. In this paper, for small or moderate-sized datasets, we use self-supervised learning (SSL) as a pretraining process to guide supervised learning. In self-supervised pretraining, image rotation combined with the Mixup strategy enables the model to learn richer feature representations. The pretrained model is learned from the rotation difference between two combined images, instead of from single-image rotation. After pretraining, SSL supports supervised learning through finetuning. Finally, we evaluate the effectiveness of the pretraining paradigm and the Mixup rotation strategy on two datasets (Adience and Carstyle), and achieve promising gains in classification performance.
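The abstract does not spell out how a Mixup rotation sample is built, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes that rotations are multiples of 90 degrees, that the Mixup weight is drawn from a Beta(alpha, alpha) distribution, and that the pretext label is the rotation difference modulo 4. The names `rot90`, `mixup_rotation_sample`, and `alpha` are hypothetical, and images are plain square grayscale grids (lists of rows) to keep the example dependency-free.

```python
import random


def rot90(img, k):
    """Rotate a square 2-D image (list of rows) counter-clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        # Transpose, then reverse the row order: one counter-clockwise quarter turn.
        img = [list(row) for row in zip(*img)][::-1]
    return img


def mixup_rotation_sample(img_a, img_b, rng, alpha=1.0):
    """Build one pretext sample: a Mixup of two rotated images plus a rotation-difference label.

    Assumption (not stated in the abstract): rotations are multiples of 90 degrees
    and the label is (k_a - k_b) mod 4.
    """
    # Rotate each image independently by a random multiple of 90 degrees.
    k_a, k_b = rng.randrange(4), rng.randrange(4)
    rot_a, rot_b = rot90(img_a, k_a), rot90(img_b, k_b)

    # Assumed Mixup weight: lam ~ Beta(alpha, alpha), as in standard Mixup.
    lam = rng.betavariate(alpha, alpha)

    # Pixel-wise convex combination of the two rotated images.
    mixed = [
        [lam * pa + (1.0 - lam) * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(rot_a, rot_b)
    ]

    # The pretext target is the rotation difference, not either absolute rotation.
    label = (k_a - k_b) % 4
    return mixed, label, lam


if __name__ == "__main__":
    rng = random.Random(0)
    a = [[0.0, 1.0], [0.5, 0.25]]
    b = [[1.0, 0.0], [0.75, 0.5]]
    mixed, label, lam = mixup_rotation_sample(a, b, rng)
    print(label, round(lam, 3), mixed)
```

Under these assumptions, a network pretrained to predict `label` from `mixed` has to recover the relative orientation of two superimposed images. That is one way to read the abstract's contrast with single-image rotation prediction. In a real pipeline the same logic would typically run on batched tensors before the finetuning stage.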